In recent years, chatbots have been the talk of the town. Large language models (LLMs) and AI have become the shining stars of technology. Consequently, web scraping has taken center stage due to AI's heavy reliance on vast troves of scraped data. However, website owners often view web scraping as a double-edged sword. They deploy various defenses against scrapers, leading to a cat-and-mouse game. To stay one step ahead and avoid being blocked, many web scraping solutions now employ IP proxies to improve scraping efficiency.

How Websites Defend Against Scrapers

When a scraper extracts data from a website, it sends requests to the server to fetch the HTML content of a page. Think of each request as a courier asking for a package. If a scraper sends too many requests too quickly, it can overwhelm the server, much like a traffic jam that brings a road to a halt. Hence, websites implement several countermeasures to fend off scrapers.

The most common tactic is limiting the access rate for any single IP. For example, if a scraper sends excessive requests from one IP address, the website will catch on and eventually block that IP. To dodge such pitfalls, it’s wise to avoid scraping with a single IP address. This is where proxy servers come into play, acting like a stealthy sidekick in your scraping endeavors.
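To make the idea concrete, here is a minimal sketch of how a site might cap requests per IP using a sliding time window. The class name, the limits, and the injectable clock are all illustrative assumptions; real sites use their own rules and typically enforce them in a web server, CDN, or firewall rather than in application code like this.

```python
import time
from collections import defaultdict, deque


class PerIpRateLimiter:
    """Allow at most max_requests per IP within a sliding window of window_seconds."""

    def __init__(self, max_requests=5, window_seconds=1.0, clock=time.monotonic):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self.clock = clock  # injectable so the behaviour can be checked without sleeping
        self._hits = defaultdict(deque)  # ip -> timestamps of that IP's recent requests

    def allow(self, ip):
        now = self.clock()
        hits = self._hits[ip]
        # Drop timestamps that have fallen out of the window.
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False  # over the limit: a site might reject the request or block the IP
        hits.append(now)
        return True
```

Because the counter is kept per IP, a burst from one address gets cut off while requests from other addresses pass untouched, which is exactly why spreading traffic across several IPs helps.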

What Is A Proxy Exactly

A proxy acts "on behalf of another", serving as an intermediary between users and the Internet. Imagine it as a trusted middleman that manages requests, enhances security, and caches data for quicker access.

When a computer connects to the Internet, it uses an IP address—like a home address directing incoming data. A proxy server also has its own IP address. When users employ proxy servers for web requests, all requests first funnel through the proxy. This means the proxy evaluates the request, forwards it, and then relays the response back to the user. Depending on your needs, proxy servers can offer varying levels of functionality, security, and privacy.
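In code, routing a request through a proxy can look like the sketch below, which uses only Python's standard library. The helper names `build_proxy_opener` and `fetch_via_proxy` are made up for this example, and the proxy URL you pass in is assumed to point at a proxy you are allowed to use.

```python
import urllib.request


def build_proxy_opener(proxy_url):
    """Return an opener that sends HTTP and HTTPS requests through proxy_url."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)


def fetch_via_proxy(url, proxy_url, timeout=10):
    """Fetch url through the proxy and return the response body as bytes."""
    opener = build_proxy_opener(proxy_url)
    with opener.open(url, timeout=timeout) as response:
        return response.read()
```

A call such as `fetch_via_proxy("https://example.com", "http://127.0.0.1:8080")` would reach the target site from the proxy's IP address instead of your own, provided a proxy is actually listening at that placeholder address.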

Why IP Proxies Are Crucial for Web Scraping

Website owners are often on high alert for suspicious activity. If you scrape data using your own IP, you risk getting blocked faster than you can say "data collection failure." IP proxies mitigate this issue substantially.

Navigating Around IP Blocking

Websites monitor incoming requests and block IPs that exhibit suspicious behavior. Making too many requests in quick succession is a red flag. IP proxies allow scrapers to spread requests across multiple addresses, much like diversifying investments across different assets to reduce risk. For instance, if one IP hits its limit and gets blocked, the others can continue the task, so the job keeps running.
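A rough sketch of that failover idea follows. `ProxyPool` and `fetch_with_failover` are hypothetical names, and the `fetch(url, proxy)` callable is assumed to raise an exception when a proxy is blocked; a real scraper would inspect status codes and response bodies more carefully before giving up on a proxy.

```python
class ProxyPool:
    """Hand out proxies round-robin, skipping any that were marked as blocked."""

    def __init__(self, proxies):
        self._proxies = list(proxies)
        self._blocked = set()
        self._next = 0

    def get(self):
        # Look at each proxy at most once per call, starting where we left off.
        for _ in range(len(self._proxies)):
            proxy = self._proxies[self._next]
            self._next = (self._next + 1) % len(self._proxies)
            if proxy not in self._blocked:
                return proxy
        raise RuntimeError("all proxies in the pool are blocked")

    def mark_blocked(self, proxy):
        self._blocked.add(proxy)


def fetch_with_failover(url, pool, fetch, max_attempts=3):
    """Try url through successive proxies; fetch(url, proxy) should raise on a block."""
    last_error = None
    for _ in range(max_attempts):
        proxy = pool.get()
        try:
            return fetch(url, proxy)
        except Exception as error:
            # Treat any failure as a block for this sketch and move on.
            pool.mark_blocked(proxy)
            last_error = error
    raise RuntimeError(f"gave up after {max_attempts} attempts") from last_error
```

Passing the fetch function in as a parameter keeps the rotation logic independent of whichever HTTP library you prefer.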

Overcoming Geo-Location Barriers

Some websites impose access restrictions based on users’ locations. If you need to collect data from a site that limits access based on geography, IP proxies can save the day. By using proxies located in a region the site allows, you can get past these restrictions and reach the data you need.

Keeping Your Scraping Activities Undercover

Frequent scraping from a single IP makes it easy for websites to track your activity, potentially leading to legal headaches. Proxies help you keep your cards close to your chest, masking your real IP and making requests appear as if they come from the proxy. It’s like wearing a disguise in a crowded room.

Optimizing Request Management

Many websites have built-in mechanisms to detect excessive request rates. Proxy pools help you distribute requests evenly across multiple IPs, allowing for effective management of request rates and reducing the likelihood of getting blocked.
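One way to picture this is a pool that always hands out the proxy that has been idle the longest and pauses when even that proxy was used too recently. The sketch below is an assumption-laden illustration: `ThrottledProxyPool`, the one-second default interval, and the injectable `clock` and `sleep` parameters are invented for the example, and suitable intervals depend entirely on the target site.

```python
import time


class ThrottledProxyPool:
    """Pick the least recently used proxy and enforce a minimum gap per proxy."""

    def __init__(self, proxies, min_interval=1.0, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self.clock = clock
        self.sleep = sleep
        # proxy -> time of its last request (None means it has never been used)
        self._last_used = {proxy: None for proxy in proxies}
        if not self._last_used:
            raise ValueError("at least one proxy is required")

    def acquire(self):
        """Return a proxy, waiting first if it was used less than min_interval ago."""
        # Unused proxies sort first, then the one with the oldest timestamp.
        proxy = min(
            self._last_used,
            key=lambda p: (self._last_used[p] is not None, self._last_used[p] or 0.0),
        )
        last = self._last_used[proxy]
        if last is not None:
            wait = self.min_interval - (self.clock() - last)
            if wait > 0:
                self.sleep(wait)
        self._last_used[proxy] = self.clock()
        return proxy
```

With N proxies and a gap of one second per proxy, the scraper as a whole can send roughly N requests per second while each individual IP stays at one.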

With these benefits, IP proxies can turbocharge your data collection efforts, handling large-scale scraping tasks more efficiently. Many web scraping service providers have recognized this trend and integrated proxy features into their tools.

Web Scraping with Proxies

Using a web scraping tool that incorporates IP proxies is a smart move, especially when tackling websites with anti-scraping measures. Octoparse stands out as a leading web scraping solution offering robust IP proxy features.

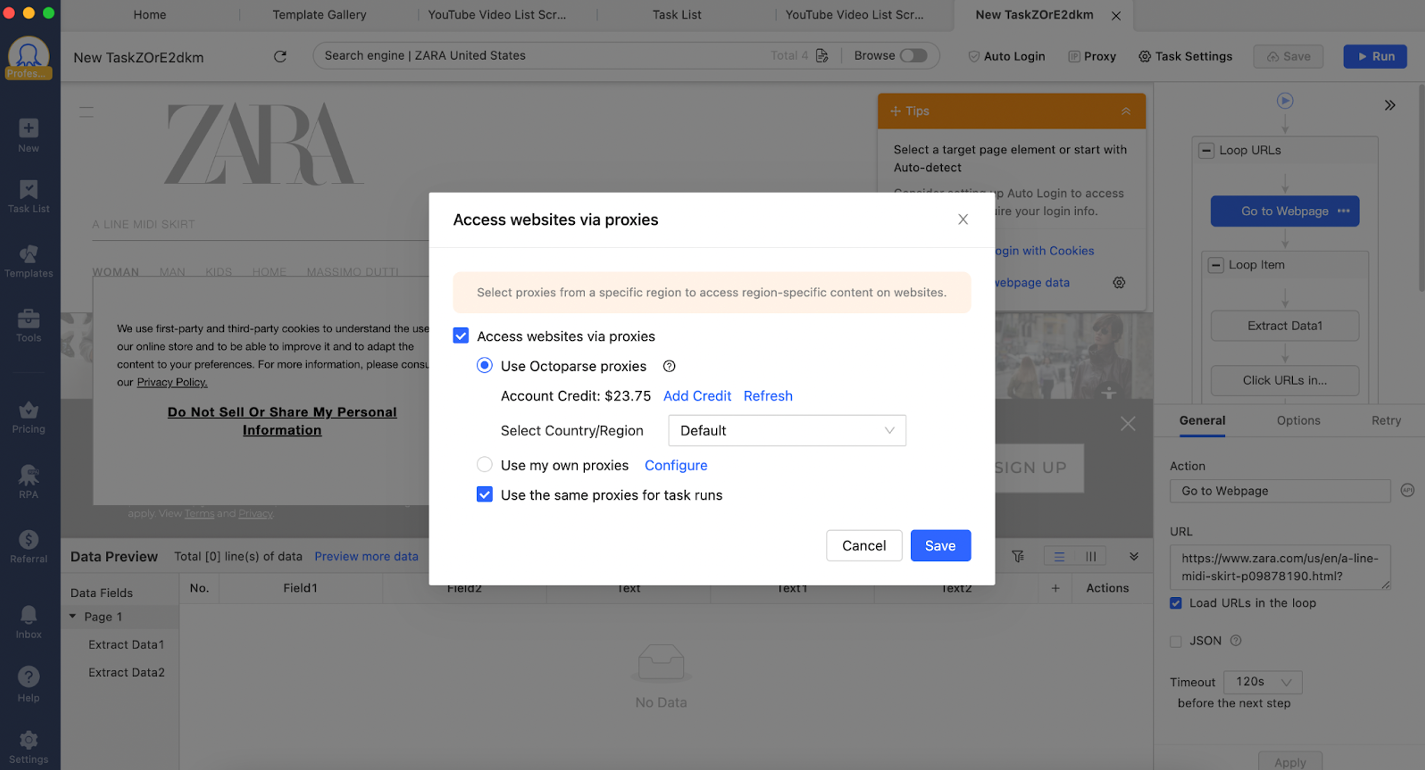

Octoparse is a powerful, free web scraping tool capable of extracting data from most mainstream websites globally. Its cloud-based data extraction operates with a vast pool of cloud IP addresses, minimizing the chances of getting blocked while safeguarding your local IP. When using Octoparse, you can easily set up built-in proxies, which are residential IPs designed to evade detection effectively. You even have the option to select IPs from specific regions for websites that enforce location-based access. Plus, if you have your own IP proxies, you can seamlessly integrate them into Octoparse.

Wrap Up

Strategically using IP proxies makes web scraping more effective, helping you sidestep hurdles like IP blocks and location-based restrictions and keeping data extraction running smoothly. Setting up IP proxies on Octoparse is a breeze, making your data collection efforts not just efficient but also worry-free.
