Managing servers is a complex task—you need to ensure uptime, optimize performance, and maintain security. Server monitoring tools serve as an essential extra set of eyes, helping IT teams and businesses detect issues before they lead to costly downtime.

This guide will cover why server monitoring is essential, key features of monitoring tools, and the best available options to help you choose the right solution for your needs.

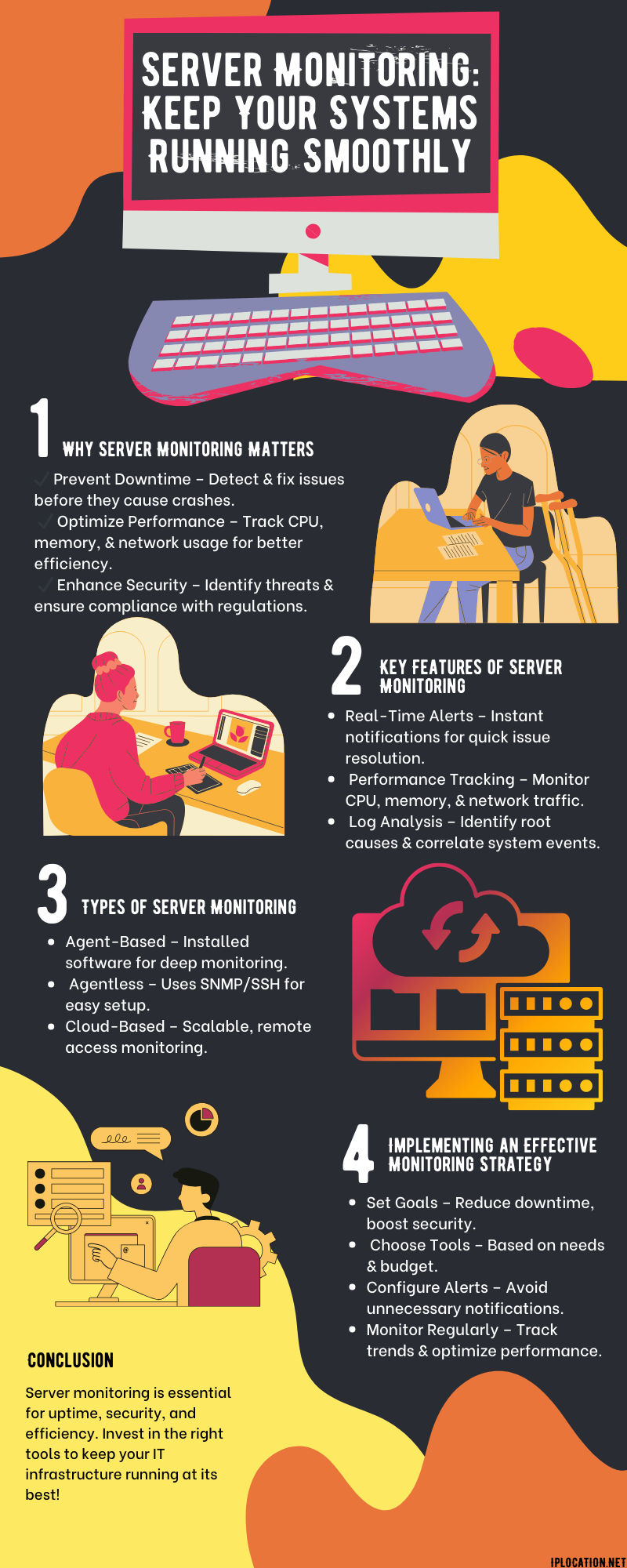

Why Server Monitoring Matters

Preventing Downtime

Unplanned downtime can lead to revenue loss, decreased productivity, and a poor user experience. Proactive monitoring detects potential issues before they disrupt operations: a sudden spike in CPU usage or memory consumption, for example, is often an early sign of trouble. Monitoring tools provide real-time alerts about system failures, hardware issues, or performance bottlenecks, allowing IT teams to act swiftly and prevent disruptions.

Optimizing Server Performance

Server monitoring tools analyze CPU, memory, disk I/O, and network traffic, showing where resources are over- or under-allocated. Acting on this data helps improve server response times and overall system stability.

Enhancing Security

Servers are prime targets for cyberattacks. Monitoring tools identify suspicious activities such as unauthorized access attempts or DDoS attacks, and the records they keep support compliance with GDPR, HIPAA, and other regulations.

Key Features of Server Monitoring Tools

Real-Time Monitoring & Alerts

- Immediate notifications via email, SMS, or dashboards

- Customizable alerts to avoid false alarms
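
One common way to cut false alarms is to alert only when a metric stays above its threshold for several consecutive samples, so a single brief spike is ignored. The sketch below illustrates that idea; the class name `SustainedBreachDetector`, the 90% threshold, and the sample values are assumptions made for illustration and do not come from any particular tool.

```python
from collections import deque


class SustainedBreachDetector:
    """Fire only when the last `required` samples all exceed `threshold`."""

    def __init__(self, threshold: float, required: int = 3) -> None:
        self.threshold = threshold
        self.required = required
        # Keeps only the most recent `required` breach flags.
        self._recent = deque(maxlen=required)

    def observe(self, value: float) -> bool:
        """Record one sample and return True if an alert should fire."""
        self._recent.append(value > self.threshold)
        return len(self._recent) == self.required and all(self._recent)


detector = SustainedBreachDetector(threshold=90.0, required=3)
samples = [95.0, 40.0, 92.0, 96.0, 99.0]  # made-up CPU percentages
fired = [detector.observe(s) for s in samples]
print(fired)  # [False, False, False, False, True]
```

The isolated 95% reading does not trigger anything; the alert fires only once three readings in a row are above the threshold.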

Performance Metrics Tracking

- Monitors CPU, memory, disk usage, and network activity

- Detects bottlenecks and optimizes resource allocation

Log Analysis & Event Correlation

- Collects and analyzes logs for root cause identification

- Helps IT teams correlate system-wide events to fix issues quickly
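
As a small illustration of log analysis, the sketch below counts failed SSH logins per source address and flags addresses that fail repeatedly. The log lines are made-up samples in the style of OpenSSH `sshd` messages, and the function name and the cutoff of two failures are assumptions; real log formats vary by system and configuration.

```python
import re
from collections import Counter

# Matches sshd-style "Failed password" messages (format assumed, see lead-in).
FAILED_LOGIN = re.compile(
    r"Failed password for (?:invalid user )?(?P<user>\S+) "
    r"from (?P<ip>\S+) port \d+"
)


def failed_logins_by_ip(lines):
    """Return a Counter mapping source IP to number of failed logins."""
    counts = Counter()
    for line in lines:
        match = FAILED_LOGIN.search(line)
        if match:
            counts[match.group("ip")] += 1
    return counts


# Made-up sample lines using documentation-reserved IP ranges.
SAMPLE_LOG = [
    "Jan 10 03:14:07 web01 sshd[812]: Failed password for root from 203.0.113.5 port 50122 ssh2",
    "Jan 10 03:14:09 web01 sshd[812]: Failed password for invalid user admin from 203.0.113.5 port 50124 ssh2",
    "Jan 10 03:15:30 web01 sshd[840]: Accepted password for deploy from 198.51.100.7 port 41000 ssh2",
    "Jan 10 03:16:02 web01 sshd[851]: Failed password for root from 192.0.2.44 port 38811 ssh2",
]

counts = failed_logins_by_ip(SAMPLE_LOG)
suspicious = [ip for ip, n in counts.items() if n >= 2]
print(suspicious)  # ['203.0.113.5']
```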

Types of Server Monitoring Solutions

Server monitoring solutions come in various forms, each designed to address specific needs based on infrastructure setup, security requirements, and performance tracking. The choice between different monitoring types depends on the depth of insights required, ease of implementation, and system resource consumption. Below are three of the most commonly used server monitoring methods:

1. Agent-Based Monitoring

Agent-based monitoring is one of the most comprehensive ways to track server performance and system health. This method requires installing monitoring agents—specialized software components—directly onto the server. These agents continuously collect data on CPU usage, memory consumption, disk performance, and network activity. Because the agents operate within the system, they provide real-time, in-depth insights that help IT teams detect potential issues early, such as resource bottlenecks, failing hardware, or security breaches. However, agent-based monitoring can also consume system resources, which may slightly impact performance, especially on resource-limited servers. This approach is best suited for enterprises that require detailed performance analysis and proactive issue resolution across complex IT infrastructures.
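
To make the idea of an agent concrete, here is a minimal sketch of a collection loop that runs on the monitored server itself, using only the Python standard library. The function names `collect_snapshot` and `run_agent` and the choice of metrics are assumptions for illustration; commercial agents gather far more detail and ship it to a central server rather than printing it.

```python
import os
import shutil
import time


def collect_snapshot(path: str = "/") -> dict:
    """Collect a few local metrics for the filesystem containing `path`."""
    usage = shutil.disk_usage(path)
    used_percent = 100 * usage.used / usage.total if usage.total else 0.0
    snapshot = {
        "timestamp": time.time(),
        "cpu_count": os.cpu_count(),
        "disk_used_percent": round(used_percent, 1),
    }
    # os.getloadavg() exists only on Unix-like systems and may raise OSError.
    if hasattr(os, "getloadavg"):
        try:
            snapshot["load_avg_1m"] = os.getloadavg()[0]
        except OSError:
            pass
    return snapshot


def run_agent(samples: int, interval_seconds: float, report=print) -> None:
    """Take `samples` snapshots, passing each one to `report`."""
    for _ in range(samples):
        report(collect_snapshot())
        time.sleep(interval_seconds)


if __name__ == "__main__":
    run_agent(samples=2, interval_seconds=0.1)
```

Because the loop runs inside the server, it also uses that server's CPU and memory, which is the trade-off described above.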

2. Agentless Monitoring

In contrast to agent-based monitoring, agentless monitoring does not require software installation on individual servers. Instead, it relies on remote data collection techniques using standard protocols such as SNMP (Simple Network Management Protocol), SSH (Secure Shell), or WMI (Windows Management Instrumentation) for Windows-based systems. This method is particularly useful for organizations that need lightweight, hassle-free monitoring with minimal system impact.

Agentless monitoring is easier to implement and maintain since it eliminates the need for software updates and agent configuration on every server. However, it may lack the depth of insight provided by agent-based monitoring, as it primarily focuses on high-level performance metrics rather than granular system-level data. Businesses that require basic performance tracking without deploying additional software often prefer agentless monitoring.
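
As a rough sketch of the agentless approach over SSH, the code below reads a Linux server's load average by running a single command remotely, with nothing installed on the target. It assumes a Linux host that exposes `/proc/loadavg`, key-based SSH access already in place, and an `ssh` client on the monitoring machine; the function names and the example host are hypothetical.

```python
import subprocess


def parse_loadavg(text: str) -> dict:
    """Parse the contents of Linux's /proc/loadavg into load averages."""
    fields = text.split()
    return {
        "load_1m": float(fields[0]),
        "load_5m": float(fields[1]),
        "load_15m": float(fields[2]),
    }


def fetch_loadavg(host: str, timeout: float = 10.0) -> dict:
    """Read /proc/loadavg from `host` over SSH (no agent on the target)."""
    result = subprocess.run(
        # BatchMode=yes makes ssh fail instead of prompting for a password.
        ["ssh", "-o", "BatchMode=yes", host, "cat", "/proc/loadavg"],
        capture_output=True,
        text=True,
        timeout=timeout,
        check=True,
    )
    return parse_loadavg(result.stdout)


# Parsing a sample line locally; fetch_loadavg("monitor@server.example")
# would do the same against a real, reachable host.
print(parse_loadavg("0.42 0.30 0.25 2/345 12345\n"))
```

The remote side returns only what a standard command exposes, which reflects why agentless checks tend to be shallower than agent-based ones.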

3. Cloud-Based Monitoring

With the increasing adoption of cloud computing, cloud-based monitoring has become essential for businesses operating in hybrid or fully cloud-based environments. These solutions are hosted on third-party platforms and provide remote access to real-time monitoring dashboards, enabling IT administrators to track server performance from anywhere.

Cloud-based monitoring tools typically offer automated updates, seamless scalability, and integration with popular cloud service providers like AWS, Azure, and Google Cloud. One of the key advantages of cloud-based monitoring is its ability to analyze distributed infrastructure and provide centralized insights, reducing the complexity of managing multiple on-premises and cloud servers. However, since data is stored offsite, security considerations must be taken into account, and businesses should ensure compliance with data protection regulations. This monitoring approach is ideal for organizations that require flexible, scalable, and cost-effective solutions without the burden of maintaining on-premises monitoring infrastructure.

Top 10 Server Monitoring Tools

| Tool | Best For | Pricing | Rating |
|---|---|---|---|
| PRTG | Customizable monitoring dashboards | From $2,149/year | 4.7/5 |
| Checkmk | Large-scale server monitoring | From $225/month | 4.7/5 |
| NinjaOne | Endpoint management & patch compliance | Pricing on request | 4.8/5 |
| Site24x7 | Cloud-based tracking | From $39/month | 4.6/5 |
| OpManager | Continuous tracking | From $20/user/month | 4.3/5 |
| Dynatrace | AI-powered application performance | From $21/user/month | 4.5/5 |
| LogicMonitor | Hybrid infrastructure monitoring | From $22/resource/month | 4.5/5 |
| Nagios | Comprehensive IT infrastructure monitoring | From $20/user/month | 4.6/5 |
| New Relic | Real-time application analytics | Pricing on request | 4.3/5 |
| AppDynamics | End-to-end business transaction tracking | From $6/core/month | 4.3/5 |

How to Implement an Effective Server Monitoring Strategy

Implementing an effective server monitoring strategy requires careful planning, the right tools, and ongoing optimization. By following these steps, businesses can ensure their servers run smoothly, remain secure, and deliver optimal performance.

1. Define Monitoring Goals

Before implementing any monitoring solution, organizations should establish clear objectives. Ask the following questions:

- What are the key performance indicators (KPIs) that need tracking? (e.g., CPU usage, memory utilization, network traffic)

- Are we primarily concerned with uptime, security threats, or resource optimization?

- Do we need monitoring for physical, virtual, or cloud-based servers?

Setting clear goals helps in choosing the right tools and establishing meaningful alert thresholds to prevent false positives or overlooked critical issues.

2. Choose the Right Monitoring Tools

With numerous monitoring tools available, selecting the right one depends on factors such as:

- Scalability: Can the tool handle an expanding infrastructure?

- Integration: Does it integrate with existing IT tools like ticketing systems, log analyzers, and automation platforms?

- Real-time Monitoring: Does the tool provide real-time dashboards and notifications?

- Automation & AI Capabilities: Does it use machine learning for predictive maintenance?

Some of the best server monitoring tools include PRTG, Checkmk, Site24x7, and Nagios. These tools provide varying levels of depth, from basic resource tracking to AI-powered predictive analytics.

3. Configure Alerts & Thresholds

Alerts notify IT teams about issues such as high CPU usage, application crashes, or security breaches. To avoid alert fatigue, configure alerts strategically:

- Set thresholds for normal, warning, and critical levels.

- Prioritize alerts based on impact (e.g., a minor memory spike vs. a database failure).

- Customize notifications (e.g., send urgent alerts via SMS, but low-priority issues via email).

Proper alert configuration ensures IT teams can respond effectively without being overwhelmed by unnecessary notifications.
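
The three guidelines above can be sketched in a few lines: classify a reading as normal, warning, or critical, then choose a notification channel by severity. The `Threshold` class, the `route` function, the channel names, and the 75/90 limits are illustrative assumptions, not settings from any specific product.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class Threshold:
    warning: float
    critical: float

    def classify(self, value: float) -> str:
        """Map a metric value to 'normal', 'warning', or 'critical'."""
        if value >= self.critical:
            return "critical"
        if value >= self.warning:
            return "warning"
        return "normal"


# Urgent alerts go out by SMS, lower-priority ones by email, normal readings nowhere.
CHANNELS = {"critical": "sms", "warning": "email", "normal": None}


def route(value: float, threshold: Threshold) -> Tuple[str, Optional[str]]:
    """Return (severity, channel) for one metric reading."""
    severity = threshold.classify(value)
    return severity, CHANNELS[severity]


cpu = Threshold(warning=75.0, critical=90.0)
print(route(82.0, cpu))  # ('warning', 'email')
print(route(97.5, cpu))  # ('critical', 'sms')
```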

4. Conduct Regular Monitoring & Reporting

Monitoring isn’t just about real-time alerts—it’s also about analyzing trends. Regular performance reports provide insights into:

- Recurring issues (e.g., frequent server overloads at peak hours).

- Long-term resource consumption (helps plan hardware upgrades).

- Security vulnerabilities (e.g., repeated unauthorized access attempts).

These reports should be reviewed periodically to refine monitoring strategies and optimize server configurations.
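
A trend report can be as simple as averaging a metric by hour of day to see when overloads recur. The sketch below does this for made-up CPU samples; the function name `peak_hours`, the 85% limit, and the data are assumptions for illustration.

```python
from collections import defaultdict
from statistics import mean


def peak_hours(samples, limit):
    """Return the hours whose average CPU usage exceeds `limit`, sorted."""
    by_hour = defaultdict(list)
    for hour, cpu_percent in samples:
        by_hour[hour].append(cpu_percent)
    return sorted(h for h, values in by_hour.items() if mean(values) > limit)


# (hour of day, CPU percent) pairs; made-up values.
SAMPLES = [
    (9, 55.0), (9, 61.0),
    (13, 88.0), (13, 94.0),
    (20, 91.0), (20, 70.0),
]

print(peak_hours(SAMPLES, limit=85.0))  # [13]
```

Hour 20 contains one high reading but averages 80.5%, so only hour 13 is reported as a recurring peak.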

5. Implement Automated Remediation

Many modern monitoring tools allow for automated responses to common issues. For example:

- Automated Restarts: If an application crashes, the system can restart it automatically.

- Scaling Resources: Cloud-based services can auto-scale resources based on demand.

- Load Balancing: Traffic can be redirected to prevent server overload.

Automation reduces downtime and minimizes manual intervention, improving operational efficiency.
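
The restart case can be sketched as a bounded retry loop: check health, restart if needed, and give up after a fixed number of attempts so that a persistent fault is escalated to a person rather than retried forever. The `remediate` function and the `FakeService` stand-in are hypothetical; in practice the two callables would wrap a real health check and a real restart command.

```python
import time
from typing import Callable


def remediate(
    is_healthy: Callable[[], bool],
    restart: Callable[[], None],
    max_attempts: int = 3,
    wait_seconds: float = 0.0,
) -> bool:
    """Restart until healthy or attempts run out; return the final health."""
    for _ in range(max_attempts):
        if is_healthy():
            return True
        restart()
        time.sleep(wait_seconds)  # give the service time to come back up
    return is_healthy()


class FakeService:
    """Stand-in service that becomes healthy after a set number of restarts."""

    def __init__(self, restarts_needed: int) -> None:
        self.restarts_needed = restarts_needed
        self.restarts = 0

    def is_healthy(self) -> bool:
        return self.restarts >= self.restarts_needed

    def restart(self) -> None:
        self.restarts += 1


service = FakeService(restarts_needed=2)
recovered = remediate(service.is_healthy, service.restart)
print(recovered, service.restarts)  # True 2
```

A `False` return value is the signal to page a human instead of trying again.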

6. Optimize & Scale Resources

Server monitoring data can reveal underutilized or overburdened resources. Use these insights to:

- Reallocate workloads to balance resource usage.

- Decommission underutilized servers to save costs.

- Upgrade hardware or shift to cloud-based solutions if resource consumption grows.

Regular optimizations ensure the infrastructure remains cost-effective and capable of handling future demands.
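
As a simple illustration, average utilization per server can be bucketed to show candidates for decommissioning or for extra capacity. The server names, the 20% and 80% cutoffs, and the function name below are assumptions; sensible cutoffs depend on the workload.

```python
def classify_utilization(avg_cpu_by_server, low=20.0, high=80.0):
    """Group servers by average CPU percent into three buckets."""
    report = {"underutilized": [], "balanced": [], "overburdened": []}
    for name, avg in sorted(avg_cpu_by_server.items()):
        if avg < low:
            report["underutilized"].append(name)
        elif avg > high:
            report["overburdened"].append(name)
        else:
            report["balanced"].append(name)
    return report


# Made-up 30-day average CPU percentages.
AVERAGES = {"web01": 86.5, "web02": 47.0, "batch01": 8.2, "db01": 91.0}

utilization = classify_utilization(AVERAGES)
print(utilization)
```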

7. Test & Update Monitoring Configurations Regularly

As IT environments evolve, monitoring strategies must adapt. Regularly review and update:

- Threshold levels for alerts (e.g., raising CPU thresholds for new applications with higher baseline usage).

- Monitoring tool integrations (adding new servers or services).

- Security settings to address new threats.

Periodic testing ensures that monitoring tools remain effective and aligned with business goals.
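
Part of that periodic review can be automated with a basic sanity check on the alert configuration itself. The sketch below flags metrics with missing levels or with a warning level that is not below the critical level; the configuration layout and the function name are assumptions rather than the format of any specific tool.

```python
def validate_thresholds(config):
    """Return a list of human-readable problems found in `config`."""
    problems = []
    for metric, levels in config.items():
        warning = levels.get("warning")
        critical = levels.get("critical")
        if warning is None or critical is None:
            problems.append(f"{metric}: missing warning or critical level")
        elif warning >= critical:
            problems.append(
                f"{metric}: warning ({warning}) must be below critical ({critical})"
            )
    return problems


# Hypothetical configuration containing two deliberate mistakes.
CONFIG = {
    "cpu_percent": {"warning": 75, "critical": 90},
    "memory_percent": {"warning": 95, "critical": 90},
    "disk_percent": {"warning": 80},
}

for problem in validate_thresholds(CONFIG):
    print(problem)
```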

Final Thoughts

Server monitoring tools are essential for maintaining uptime, optimizing performance, and securing your IT infrastructure, and a well-structured monitoring strategy is what turns them into less downtime and greater efficiency. Whether you need on-premises monitoring, cloud-based solutions, or AI-powered analytics, the right tool depends on your business size, budget, and IT requirements.

Images by Freepik.
