Convolutional Neural Networks (CNNs) are among the most influential and widely applied technologies in artificial intelligence, particularly in development services related to computer vision. Inspired by the way an animal's visual cortex works, CNNs have changed the way machines perceive and process visual information. By learning to detect patterns, shapes, and structures in images, CNNs accomplish complex visual tasks that were once believed to be exclusively human.

How CNNs Work

At the core of CNNs are three fundamental types of layers: convolutional layers, pooling layers, and fully connected layers. These layers work together to automatically and adaptively learn spatial hierarchies of features from input images, making CNNs a crucial component in computer vision development services.

Convolutional Layers

These layers convolve the input image with a set of filters (or kernels) to produce feature maps. Each filter detects a particular feature in the image, such as an edge, a texture, or a pattern.
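To make the sliding-filter idea concrete, here is a minimal pure-Python sketch. The function name `convolve2d`, the toy image, and the `vertical_edge` filter are illustrative assumptions, and the operation is the unflipped "convolution" (strictly, cross-correlation) that deep learning libraries typically use, with no padding and a stride of 1.

```python
def convolve2d(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the feature map."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Weighted sum of the image patch currently under the kernel.
            total = sum(
                image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh)
                for dj in range(kw)
            )
            row.append(total)
        feature_map.append(row)
    return feature_map


# A 4x4 image whose left half is dark (0) and right half is bright (1).
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Hypothetical vertical-edge filter: responds where brightness rises from left to right.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]

feature_map = convolve2d(image, vertical_edge)
print(feature_map)  # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
```

The feature map is non-zero only in the middle column, which is exactly where the dark-to-bright edge sits. In a real CNN the filter values are not hand-picked like this but learned during training.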

Pooling Layers

Pooling layers reduce the spatial dimensions of feature maps while retaining their most important information. This down-sampling makes the network more tolerant of small shifts and distortions in the input image.
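As a rough illustration, the sketch below implements non-overlapping 2x2 max pooling in pure Python. The function name `max_pool2d` and the sample values are assumptions made for this example; average pooling would follow the same pattern with a mean in place of `max`.

```python
def max_pool2d(feature_map, size=2):
    """Non-overlapping max pooling: keep the largest value in each size x size window."""
    pooled = []
    for i in range(0, len(feature_map) - size + 1, size):
        row = []
        for j in range(0, len(feature_map[0]) - size + 1, size):
            window = [
                feature_map[i + di][j + dj]
                for di in range(size)
                for dj in range(size)
            ]
            row.append(max(window))
        pooled.append(row)
    return pooled


feature_map = [
    [1, 3, 2, 0],
    [4, 2, 1, 1],
    [0, 1, 5, 6],
    [2, 2, 7, 8],
]

pooled = max_pool2d(feature_map)  # 4x4 input becomes 2x2
print(pooled)  # [[4, 2], [2, 8]]
```

Each output value summarizes a 2x2 window, so moving a strong activation by one pixel inside its window leaves the pooled result unchanged.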

Fully Connected Layers

The output of the final convolutional and pooling layers is flattened and fed into fully connected layers, which perform the final classification or regression based on the extracted features.
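The flatten-then-connect step might look like the following sketch. The helper names `flatten` and `dense`, and all weights and biases, are made up for illustration; a trained network would learn these values.

```python
def flatten(feature_maps):
    """Concatenate a list of 2D feature maps into one flat vector."""
    return [value for fmap in feature_maps for row in fmap for value in row]


def dense(inputs, weights, biases):
    """Fully connected layer: each output is a weighted sum of every input plus a bias."""
    return [
        sum(w * x for w, x in zip(neuron_weights, inputs)) + b
        for neuron_weights, b in zip(weights, biases)
    ]


# Two 2x2 feature maps, as they might come out of the last pooling layer.
feature_maps = [
    [[1, 0], [0, 1]],
    [[2, 2], [0, 0]],
]
vector = flatten(feature_maps)  # 8 values: [1, 0, 0, 1, 2, 2, 0, 0]

# Hypothetical weights for two output neurons (one row of 8 weights per neuron).
weights = [
    [1, 0, 0, 1, 0, 0, 0, 0],
    [0, 0, 0, 0, 1, 1, 1, 1],
]
biases = [0, -1]

scores = dense(vector, weights, biases)
print(scores)  # [2, 3]
```

Unlike a convolutional filter, every output neuron here sees every input value, which is why this layer is called fully connected.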

CNN Architecture

A typical CNN stacks several kinds of layers, each with a specific role in extracting or classifying features. Together, these layers transform the input data into meaningful patterns and eventually into predictions, which makes CNNs very effective in tasks like image recognition and object detection. For good results in your own project, consider working with Data Science UA professionals in this field. Here's a breakdown of a basic CNN architecture:

- Input Layer: Receives the raw image data, usually as pixel values, and prepares it for the layers that follow.

- Convolutional Layer: Applies a number of filters that scan the input image for edges, textures, or other shapes. The output is a set of feature maps, each highlighting a different aspect of the input image.

- ReLU Activation Function: Introduces non-linearity into the model so the network can learn complicated patterns in the data. Without this non-linearity, the network could learn only linear relationships.

- Pooling Layer: Reduces the size of the feature maps through down-sampling, which lowers the computational load and makes the network more robust to small translations and distortions in the input image while keeping the most important information.

- Fully Connected Layer: After the feature maps have been processed and reduced, they are flattened into a vector and passed through one or more fully connected layers. These combine the features learned by the convolutional and pooling layers into a final output, such as a class label or a probability distribution over multiple classes.

- Output Layer: The last layer of the network, which produces the prediction. For classification problems, it is usually a softmax layer that gives the probability of each class, from which the model picks the most likely label for the input image.
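Two of the pieces in this breakdown, the ReLU activation and the softmax output, are small enough to sketch directly. The input values below are arbitrary examples, and the softmax subtracts the largest score before exponentiating, a common numerical-stability trick that does not change the result.

```python
import math


def relu(values):
    """ReLU: pass positive values through unchanged, replace negatives with zero."""
    return [max(0.0, v) for v in values]


def softmax(scores):
    """Turn raw class scores into probabilities that sum to 1."""
    peak = max(scores)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


activations = relu([-2.0, 0.5, 3.0])
print(activations)  # [0.0, 0.5, 3.0]

# Hypothetical raw scores for three classes from the last fully connected layer.
probabilities = softmax([2.0, 1.0, 0.1])
predicted_class = probabilities.index(max(probabilities))
print([round(p, 2) for p in probabilities])  # [0.66, 0.24, 0.1]
print(predicted_class)  # 0
```

The class with the highest raw score also gets the highest probability, so taking the largest softmax output gives the predicted label.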

Training CNNs

The major steps for training a CNN are:

- Forward Propagation: The image input is fed forward through the network to generate the prediction at the output layer.

- Loss Function: Measures the difference between the predicted output and the true label. Common choices are cross-entropy for classification tasks and mean squared error for regression tasks.

- Backpropagation: The error is propagated backward through the network to compute how much each filter weight and neuron weight contributed to the loss.

- Optimization: An optimizer such as gradient descent or Adam uses these gradients to update the model's parameters so that the loss decreases and the model learns from the data.
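The four steps above can be sketched on a deliberately tiny model: a single sigmoid neuron rather than a full CNN, trained with plain gradient descent rather than Adam. The toy data, the learning rate of 0.5, and the 50 epochs are assumptions chosen only to keep the example short; the loop structure is the same one a CNN training run follows.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


# Toy data set: the label is 1 when the input is positive, otherwise 0.
data = [(-2.0, 0), (-1.0, 0), (1.0, 1), (2.0, 1)]

weight, bias = 0.0, 0.0
learning_rate = 0.5  # assumed value for this sketch
losses = []

for epoch in range(50):
    grad_w = grad_b = total_loss = 0.0
    for x, y in data:
        # Forward propagation: compute the prediction.
        p = sigmoid(weight * x + bias)
        # Loss function: binary cross-entropy between prediction and label.
        total_loss += -(y * math.log(p) + (1 - y) * math.log(1 - p))
        # Backpropagation: for sigmoid + cross-entropy, d(loss)/dz = p - y.
        error = p - y
        grad_w += error * x
        grad_b += error
    # Optimization: one gradient descent step using the mean gradient.
    weight -= learning_rate * grad_w / len(data)
    bias -= learning_rate * grad_b / len(data)
    losses.append(total_loss / len(data))

print(f"first loss {losses[0]:.3f}, last loss {losses[-1]:.3f}, weight {weight:.2f}")
```

The recorded loss starts at about 0.693 (the cross-entropy of a 50/50 guess) and falls as the weight grows positive. In a CNN the same gradients are computed layer by layer for every filter and neuron, usually by the framework's automatic differentiation.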

Applications of CNNs

CNNs have taken root in many fields of technology related to image processing. Some of the most prominent applications include:

- Image Recognition: CNNs are at the core of systems that classify images, for example identifying objects in photos or recognizing handwritten digits.

- Object Detection: Beyond simple object recognition, CNNs are applied in systems capable of detecting and classifying multiple objects within one image; this function is central to autonomous vehicles.

- Facial Recognition: Many modern security systems use CNNs to identify and verify individuals based on their facial features.

- Medical Image Analysis: CNNs help diagnose diseases by analyzing medical images like X-rays and MRIs.

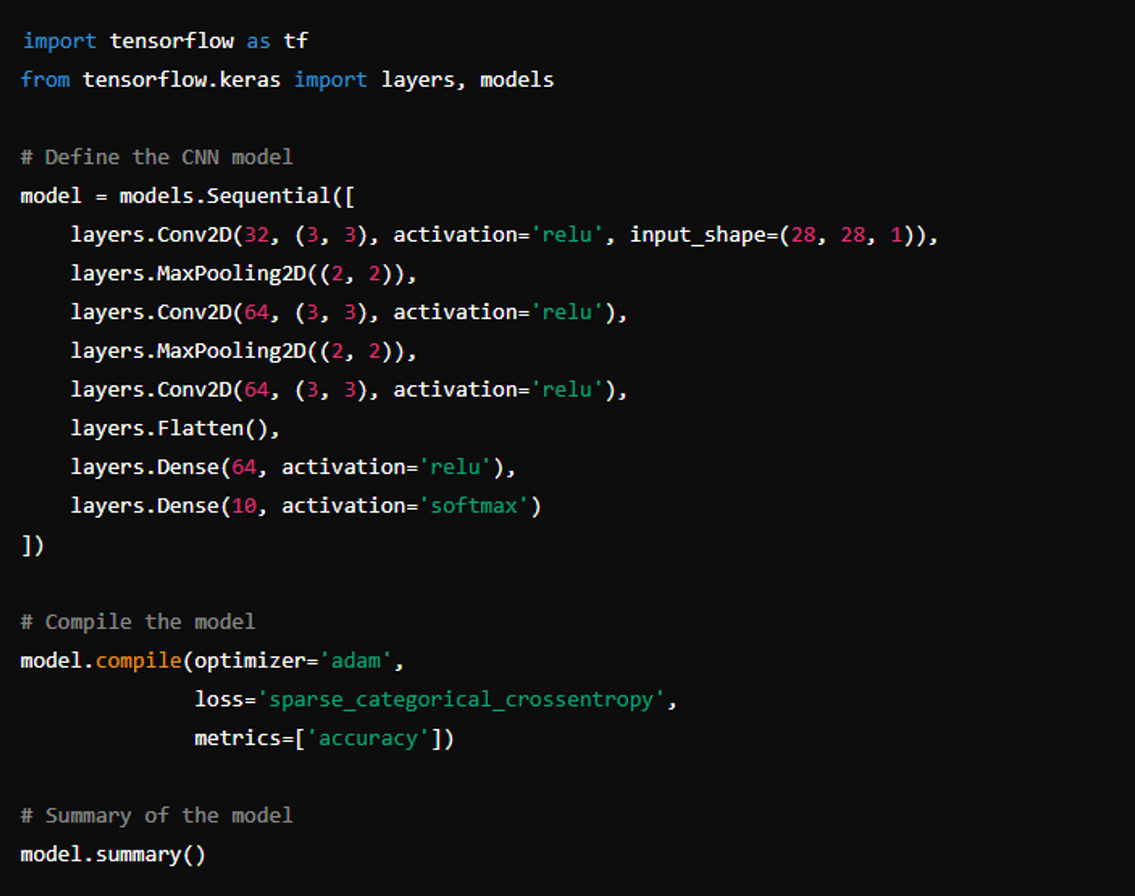

Building a Simple CNN Model

Now, let us make this concrete by walking through the creation of a simple CNN model in TensorFlow.

The result will be a very basic CNN for image classification, able to recognize digits from the MNIST dataset. It has three convolutional layers with max-pooling layers, followed by two fully connected layers at the end.
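A minimal sketch of such a model, assuming the Keras API bundled with TensorFlow 2, is shown here. The filter counts (32, 64, 64), the 3x3 kernels, the 64-unit hidden layer, and the choice to pool after only the first two convolutions are illustrative assumptions, not values stated in the text. The small helpers `conv_size` and `pool_size` are hypothetical names used to trace how the 28x28 MNIST image shrinks from layer to layer.

```python
def conv_size(size, kernel=3):
    """Spatial size after an unpadded ('valid') convolution with stride 1."""
    return size - kernel + 1


def pool_size(size, window=2):
    """Spatial size after non-overlapping max pooling."""
    return size // window


def build_mnist_cnn():
    """Return a compiled Keras CNN; layer sizes are illustrative assumptions."""
    # Imported here so the shape helpers above also work without TensorFlow installed.
    import tensorflow as tf

    model = tf.keras.Sequential([
        tf.keras.Input(shape=(28, 28, 1)),  # grayscale MNIST image
        tf.keras.layers.Conv2D(32, (3, 3), activation="relu"),
        tf.keras.layers.MaxPooling2D((2, 2)),
        tf.keras.layers.Conv2D(64, (3, 3), activation="relu"),
        tf.keras.layers.MaxPooling2D((2, 2)),
        tf.keras.layers.Conv2D(64, (3, 3), activation="relu"),
        tf.keras.layers.Flatten(),
        tf.keras.layers.Dense(64, activation="relu"),     # first fully connected layer
        tf.keras.layers.Dense(10, activation="softmax"),  # one output per digit
    ])
    model.compile(
        optimizer="adam",
        loss="sparse_categorical_crossentropy",  # expects integer labels 0-9
        metrics=["accuracy"],
    )
    return model


# Trace the spatial size through the convolution and pooling stack.
size = 28
size = pool_size(conv_size(size))  # 28 -> 26 -> 13
size = pool_size(conv_size(size))  # 13 -> 11 -> 5
size = conv_size(size)             # 5 -> 3
flattened_length = size * size * 64
print(size, flattened_length)  # 3 576
```

To train it, one would typically load the data with `tf.keras.datasets.mnist.load_data()`, scale the pixel values to the range 0 to 1, add a trailing channel axis, and call `model.fit` on the result. Calling `build_mnist_cnn().summary()` should show the same shapes traced by hand above.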

Future Trends in CNNs

The field of CNNs is rapidly evolving, with several exciting trends on the horizon:

- Advanced Architectures: Architectures such as ResNet and EfficientNet have changed how CNNs are made deeper and more efficient, sometimes achieving better performance with fewer parameters.

- Reinforcement Learning: CNNs are being combined with reinforcement learning and now support far more complex decision-making, particularly in robotics and gaming.

- Transfer Learning: The ability to transfer learned features to another task has made CNNs more flexible to apply, especially when data is limited.

Conclusion

Convolutional Neural Networks have revolutionized computer vision, bringing automation to tasks once thought to require human intelligence. CNNs look set to play an even larger role in AI and to drive further innovation in domains such as healthcare and autonomous systems. Their ability to analyze and interpret complex visual data with high accuracy has already proven valuable in medical imaging and real-time object recognition. As CNNs advance, they should bring enhanced capabilities in areas like augmented reality and precision agriculture, unlocking new possibilities for industries worldwide.
